Engineers build longer-lasting qubit

In a major step towards practical quantum computers, Princeton engineers have built a superconducting qubit that lasts three times longer than today’s best versions.

In an article in the journal Nature, the Princeton team reported their new qubit lasts for over 1 millisecond. This is three times longer than the best ever reported in a lab setting, and nearly 15 times longer than the industry standard for large-scale processors. The researchers built a fully functioning quantum chip based on this qubit to validate its performance, clearing one of the key obstacles to efficient error correction and scalability for industrial systems.

“The real challenge, the thing that stops us from having useful quantum computers today, is that you build a qubit and the information just doesn’t last very long,” said Andrew Houck, leader of a federally funded national quantum research centre, Princeton’s dean of engineering and co-principal investigator on the paper. “This is the next big jump forward.”

The new qubit design is similar to those already used by leading companies like Google and IBM, and could easily be slotted into existing processors, according to the researchers. Swapping Princeton’s components into Google’s best quantum processor, called Willow, would enable it to work 1000 times better, Houck said. The benefits of the Princeton qubit grow exponentially as system size grows, so adding more qubits would bring even greater benefit.

Better hardware is essential to advancing quantum computers

Quantum computers have shown the potential to solve problems that cannot be addressed with conventional computers. But current versions are still in early stages of development and remain limited. This is mainly because the basic component in quantum computers, the qubit, fails before systems can run useful calculations. Extending the qubit’s lifetime, called coherence time, is essential for enabling quantum computers to perform complex operations. The Princeton qubit reportedly marks the largest single advance in coherence time in more than a decade.

“This advance brings quantum computing out of the realm of merely possible and into the realm of practical,” Houck said. “Now we can begin to make progress much more quickly. It’s very possible that by the end of the decade we will see a scientifically relevant quantum computer.”

While engineers are pursuing a range of technologies to develop qubits, the Princeton version relies on a type of circuit called a transmon qubit. Transmon qubits, used in efforts by companies including Google and IBM, are superconducting circuits that run at extremely low temperatures. Their advantages include a relatively high tolerance for outside interference and compatibility with current electronics manufacturing.

But the coherence time of transmon qubits has proven extremely hard to extend. Recent work from Google showed that the major limitation faced in improving their latest processor comes down to the material quality of the qubits.

The Princeton team took a two-pronged approach to redesigning the qubit. First, they used a metal called tantalum to help the fragile circuits preserve energy. Second, they replaced the traditional sapphire substrate with high-quality silicon, the standard material of the computing industry. To grow tantalum directly on silicon, the team had to overcome a number of technical challenges related to the materials’ intrinsic properties. But they prevailed, unlocking the deep potential of this combination.

Nathalie de Leon, co-principal investigator on the new qubit, said that not only does their tantalum–silicon chip outperform existing designs, but it’s also easier to mass-produce. “Our results are really pushing the state of the art,” she said.

Michel Devoret, Chief Scientist for Hardware at Google Quantum AI, which partially funded the research, said that the challenge of extending the lifetimes of quantum computing circuits had become a “graveyard” of ideas for many physicists. “Nathalie really had the guts to pursue this strategy and make it work,” Devoret said.

Using tantalum makes quantum chips more robust

Houck said a quantum computer’s power hinges on two factors. The first is the total number of qubits that are strung together. The second is how many operations each qubit can perform before errors take over. By improving the quality of individual qubits, the new paper advances both. Specifically, a longer-lasting qubit helps resolve the industry’s greatest obstacles: scaling and error correction.

The most common source of error in these qubits is energy loss. Tiny, hidden surface defects in the metal can trap and absorb energy as it moves through the circuit. This causes the qubit to rapidly lose energy during a calculation, introducing errors that multiply as more qubits are added to a chip. Tantalum typically has fewer of these defects than more commonly used metals like aluminium. Fewer errors also make it easier for engineers to correct those that do occur.

Houck and de Leon first introduced the use of tantalum for superconducting chips in 2021 in collaboration with Princeton chemist Robert Cava, the Russell Wellman Moore Professor of Chemistry. Despite having no background in quantum computing, Cava, an expert on superconducting materials, had been inspired by a talk de Leon had delivered a few years earlier, and the two struck up an ongoing conversation about qubit materials. Eventually, Cava pointed out that tantalum could provide more benefits and fewer downsides. “Then she went and did it,” Cava said, referring to de Leon and the broader team. “That’s the amazing part.”

Researchers from all three labs followed Cava’s intuition and built a superconducting tantalum circuit on a sapphire substrate. The design demonstrated a significant boost in coherence time, in line with the world record.

Tantalum’s main advantage is that it’s exceptionally robust and can survive the harsh cleaning needed for removing contamination from the fabrication process. “You can put tantalum in acid, and still the properties don’t change,” said Bahrami, co-lead author on the new paper.

Once the contaminants were removed, the team devised a way to measure the remaining sources of energy loss. Most of that loss came from the sapphire substrate. They replaced the sapphire with silicon, a material that is widely available with high purity.

Combining these two materials while refining manufacturing and measurement techniques produced the gain in the transmon’s coherence time. Because the improvements scale exponentially with system size, Houck said that swapping the current industry best for Princeton’s design would enable a hypothetical 1000-qubit computer to work roughly 1 billion times better.
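
One way to see how a 1000-fold gain on a roughly 100-qubit chip could grow to a billion-fold gain at roughly 1000 qubits is the textbook surface-code scaling, in which the logical error rate falls as a fixed factor raised to the power (d + 1)/2, where d is the code distance, and a distance-d code uses 2d² − 1 physical qubits. The sketch below is an illustration under that assumption only. The article does not say which model Houck used, and the function names, the reference distance of 7 and the reading of "works better" as a reduction in logical error rate are all assumptions introduced here.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits in a distance-d surface code: d*d data qubits plus d*d - 1 measure qubits."""
    return 2 * distance * distance - 1


def suppression_exponent(distance: int) -> int:
    """Exponent (d + 1) / 2 in the assumed scaling; d is odd, so this is an integer."""
    return (distance + 1) // 2


def improvement_at(distance: int,
                   ref_distance: int = 7,
                   ref_improvement: float = 1000.0) -> float:
    """Extrapolate an improvement factor from a reference distance to another distance.

    ASSUMPTION: improvement = r ** ((d + 1) / 2) for some fixed ratio r,
    calibrated so that the improvement equals ref_improvement at ref_distance.
    """
    per_step_ratio = ref_improvement ** (1.0 / suppression_exponent(ref_distance))
    return per_step_ratio ** suppression_exponent(distance)


if __name__ == "__main__":
    # Hypothetical code distances chosen to span about 100 to about 1000 qubits.
    for d in (7, 15, 23):
        print(f"d={d:2d}  qubits={surface_code_qubits(d):4d}  "
              f"improvement={improvement_at(d):.3g}")
```

Under these assumptions, a 1000-fold gain at distance 7 (97 qubits) extrapolates to about a billion-fold gain at distance 23 (1057 qubits), which is consistent with the two figures quoted in the article.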

Using silicon primes the new chips for industrial systems

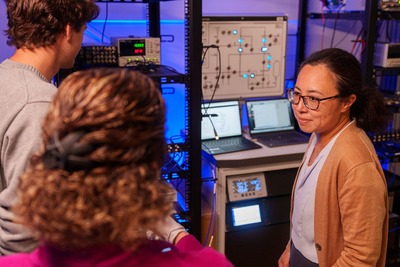

The work brings together deep expertise in quantum device design and materials science. Houck’s group specialises in building and optimising superconducting circuits; de Leon’s lab focuses on quantum metrology and the materials and fabrication processes that underpin qubit performance; and Cava’s research team has spent three decades at the forefront of superconducting materials. Combining their expertise has yielded results that none of the groups could have achieved alone. These results have now attracted industry attention.

Devoret, a professor of physics at the University of California-Santa Barbara, said that partnerships between universities and industry are important for advancing the frontiers of technology. “There is a rather harmonious relationship between industry and academic research,” he said. University labs are well positioned to focus on the fundamental aspects that limit the performance of a quantum computer, while industry scales up those advances into large-scale systems.

“We’ve shown that it’s possible in silicon,” de Leon said. “The fact that we’ve shown what the critical steps are, and the important underlying characteristics that will enable these kinds of coherence times, now makes it pretty easy for anyone who’s working on scaled processors to adopt.”
